Vipin Choudhary

When we start a DevOps journey in an organization, there is excitement about working with different toolchains and processes in an overall more mature culture. It’s a completely different experience, where the objective is to speed up delivery through innovative ideas and an agile way of working. A DevOps transformation gives us a new perspective on:

- Continuous integration workflows that give product teams automated pipelines, where we can see in a single view that the different modules are well integrated.

- Continuous deployment, where agile infrastructure (whether in a cloud ecosystem or on-premises) makes life easier because we no longer have to think about the infrastructure and configuration of each stage.

- Continuous monitoring solutions that give us insights into any application and complete the feedback loop of DevOps and agile.

- Continuous tracking with multiple issue tracking systems that follow features as they move through the different stages.

Over the years, we have built various portals and tools to support different teams within the organization: build tools, a code quality dashboard for every project, a monitoring dashboard for applications backed by the cloud console, and so on. Later we realized that we had created so many powerful tools within our ecosystem that we now needed to think about how to manage them effectively. These powerful tools become a problem in themselves if we do not secure them with automated security guidelines and clear responsibilities. Let’s take a situation where:

- Developers now get automated pipelines, but where is the lifecycle, how is it linked to deployed features, and, most importantly, are we building quality code?

- Environments are provisioned automatically in the cloud, but who controls them when we are done? Have we chosen the right scale for the environment to keep costs under control? And while teams are busy deploying new features, who is monitoring security within the newly created infrastructure?

- Monitoring solutions give insights into what is going right or wrong in an application. But do they also show how well we are using the analytical data against the KPIs defined for every layer? For instance, how is the application behaving overall in terms of user interest, usage of various features, and so on?

- An enterprise issue tracking system is in place, but how reliably does it track all the features back and forth automatically? Does it record which user updated information manually at the various stages?

- Other concerns remain, such as missing audit trails, high cost, compliance, unmanaged vulnerabilities, and difficulty in locating a problem across the entire landscape. What else could there be?

Exhausting, right? We have tools for everything, yet we ignore the most basic fact: without proper orchestration, these tools are merely independent instruments, each supporting only a limited area. We have implemented toolchains for every layer, which is an integral part of DevOps, but we forgot to bind them together and keep a finger on their pulse. Now we are looking for something to control this powerful environment with defined roles and responsibilities.

Service Catalog

Imagine a portal where you simply log in and it guides you through what you can do within the complete software development ecosystem, without having to worry about guidelines and compliance. Build this portal so that, under one single umbrella, you can hook up all the required DevOps toolchains. Consistent governance and compliance requirements need to be converted into binaries in the form of a service catalog, where users can organize, manage, provision, and monitor the maturity of the processes according to their defined roles.

The following are a few requirements to keep in mind:

- A code maturity dashboard that shows how different project teams or vendors are writing their code, measured against the parameters defined by the software guidelines.

- Controlled provisioning of instances in the cloud, with defined security guidelines and limits. These limits could cover the number of instances or servers, their scale, resources, etc.

- Monitoring solutions that display common metrics for all applications and measure them effectively, with insights into their adoption within the business.

- A dashboard revealing cost statistics and optimization areas at various levels, such as project, user, and infrastructure.

- Automated updates across the integrated systems whenever something changes in one of them.

- Intrusion detection as well as an effective preventive mechanism for both the software and the application.
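
To make the controlled-provisioning requirement more concrete, here is a minimal Python sketch of how a catalog might check a provisioning request against role-based limits before anything is created. The role names, limits, and the `ProvisionRequest` and `violations` names are all hypothetical, chosen only for illustration; a real catalog would load its limits from the governance body's guidelines and call the cloud provider's API afterwards.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class RoleLimits:
    max_instances: int
    allowed_sizes: FrozenSet[str]


# Hypothetical limits per role; real values would come from the guidelines.
LIMITS = {
    "developer": RoleLimits(2, frozenset({"small"})),
    "release-manager": RoleLimits(10, frozenset({"small", "medium", "large"})),
}


@dataclass(frozen=True)
class ProvisionRequest:
    role: str
    instance_count: int
    instance_size: str
    encrypted_disk: bool


def violations(request: ProvisionRequest) -> List[str]:
    """Return the guideline violations for a request (empty means compliant)."""
    limits = LIMITS.get(request.role)
    if limits is None:
        return [f"unknown role: {request.role}"]
    found = []
    if request.instance_count > limits.max_instances:
        found.append(
            f"instance count {request.instance_count} exceeds limit {limits.max_instances}"
        )
    if request.instance_size not in limits.allowed_sizes:
        found.append(
            f"size '{request.instance_size}' is not allowed for role '{request.role}'"
        )
    if not request.encrypted_disk:
        # Assumed security guideline: every disk must be encrypted.
        found.append("disk encryption is required")
    return found
```

The portal would provision only when `violations(request)` returns an empty list, and could show the returned messages to the user otherwise.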

Now the question arises: with this catalog, are we trying to reinvent wheels that different providers have already built, such as the cloud console, monitoring dashboards, and build tool UIs?

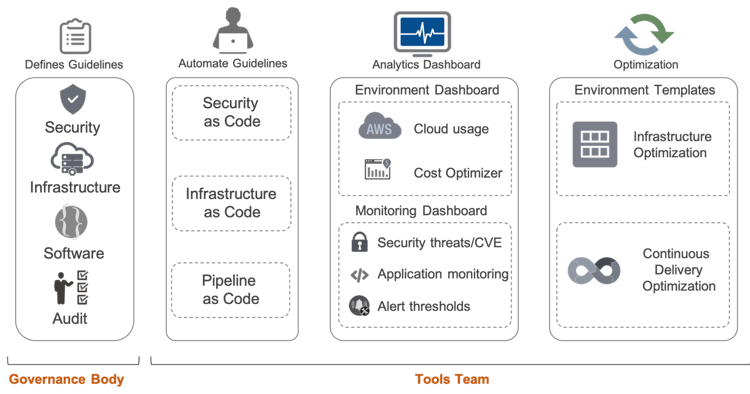

No. We have just extended the services of those providers and integrated them into one portal with the help of the APIs they expose. An organization’s governance body helps set up the guidelines, whereas the DevOps evangelist, working within the organization’s Tools team, helps convert those guidelines (security, infrastructure, software, etc.) into binaries.
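
One way to picture this integration is a thin adapter per provider behind a common interface, so the portal only aggregates what each tool already exposes. The sketch below is an assumption about how that could look, not a description of any specific product: `ToolAdapter`, the two stub classes, and `portal_view` are hypothetical names, and the returned numbers are placeholder values standing in for real API responses.

```python
from typing import Dict, Iterable, Protocol


class ToolAdapter(Protocol):
    """Anything the portal can ask for a status summary."""

    name: str

    def summary(self) -> Dict[str, object]: ...


class StubBuildTool:
    """Stand-in for a build server; a real adapter would call its API."""

    name = "build"

    def summary(self) -> Dict[str, object]:
        # Placeholder values, not real measurements.
        return {"total_pipelines": 12, "failed_pipelines": 1}


class StubCloudConsole:
    """Stand-in for a cloud console; a real adapter would call its API."""

    name = "cloud"

    def summary(self) -> Dict[str, object]:
        # Placeholder values, not real measurements.
        return {"running_instances": 7}


def portal_view(adapters: Iterable[ToolAdapter]) -> Dict[str, Dict[str, object]]:
    """Collect every tool's summary under one roof, keyed by tool name."""
    return {adapter.name: adapter.summary() for adapter in adapters}
```

Adding another provider then means writing one more adapter, without changing the portal itself.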

How do we plan this?

Step 1: Define guidelines

Most organizations already have a compliance officer who takes care of guidelines in areas such as security, infrastructure handling, software development, and the audit process. If so, you have already completed step 1. Otherwise, you need to define these guidelines or adopt them from existing definitions by a standardization body such as the CIS (Center for Internet Security).

Step 2: Start converting guidelines into code

This is perhaps the most challenging aspect: you may need a new mind within your organization, someone who can build this completely new automation while supporting the organization's culture. A DevOps evangelist will help here by bringing in the following concepts:

- Security as code, which you inject into the software as well as the infrastructure in the form of predefined, automated guidelines.

- Infrastructure as code, which automates the creation of infrastructure for development as well as production environments with scripts that minimize human error and mark your environment as compliant.

- Pipeline as code, where software development teams can use predefined modules while working on existing build pipelines. These include modules for monitoring integration, collaboration tools integration, ITSM tools integration, etc.
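
As a rough illustration of the pipeline-as-code idea, the Python sketch below assembles a pipeline from predefined modules and refuses to build one that leaves out a mandatory module. The module names, the steps they emit, and the rule that a security scan is mandatory are assumptions made for this example; an actual setup would express the same idea in the pipeline syntax of whichever build tool is in use.

```python
from typing import Callable, Dict, List

# A module turns the pipeline context into the steps it contributes.
Module = Callable[[Dict[str, str]], List[str]]


def build(ctx: Dict[str, str]) -> List[str]:
    return [f"compile {ctx['project']}", "run unit tests"]


def security_scan(ctx: Dict[str, str]) -> List[str]:
    return [f"scan dependencies of {ctx['project']}"]


def monitoring(ctx: Dict[str, str]) -> List[str]:
    return [f"register {ctx['project']} with monitoring"]


# Hypothetical registry of modules maintained by the Tools team.
MODULES: Dict[str, Module] = {
    "build": build,
    "security-scan": security_scan,
    "monitoring": monitoring,
}

# Assumed guideline: every pipeline must include these modules.
REQUIRED_MODULES = ("security-scan",)


def assemble_pipeline(project: str, module_names: List[str]) -> List[str]:
    """Expand the chosen modules into an ordered list of pipeline steps."""
    unknown = [name for name in module_names if name not in MODULES]
    if unknown:
        raise ValueError(f"unknown modules: {unknown}")
    missing = [name for name in REQUIRED_MODULES if name not in module_names]
    if missing:
        raise ValueError(f"required modules missing: {missing}")
    ctx = {"project": project}
    steps: List[str] = []
    for name in module_names:
        steps.extend(MODULES[name](ctx))
    return steps
```

A team would call something like `assemble_pipeline("shop", ["build", "security-scan", "monitoring"])`, while a request without `"security-scan"` raises a `ValueError`. In this way the guideline is enforced by code rather than by review.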

Step 3: Measurement

An automated measurement mechanism is the only way to measure the success of any DevOps implementation. This is where we need one analytics dashboard that continuously measures various metrics for the entire automated environment. This dashboard should also be able to integrate with existing monitoring solutions and map key values of the existing systems to business goals.
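
The mapping from measured values to business goals can be sketched as a small scorecard. In the Python example below, the `Kpi` structure, the metric names, and the targets are hypothetical and only show the shape of such a mapping; the measurements themselves would come from the existing monitoring solutions.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Kpi:
    metric: str            # name of the value reported by monitoring
    business_goal: str     # goal this metric is mapped to
    target: float
    higher_is_better: bool = True


def scorecard(
    kpis: List[Kpi], measurements: Dict[str, float]
) -> List[Tuple[str, str, str]]:
    """Return (metric, business goal, status) for every defined KPI."""
    rows = []
    for kpi in kpis:
        value = measurements.get(kpi.metric)
        if value is None:
            status = "no data"
        else:
            if kpi.higher_is_better:
                met = value >= kpi.target
            else:
                met = value <= kpi.target
            status = "met" if met else "missed"
        rows.append((kpi.metric, kpi.business_goal, status))
    return rows
```

Reporting "no data" separately from "missed" keeps gaps in monitoring coverage visible instead of hiding them behind a failed target.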

Step 4: Optimization

Continuous improvement based on continuous feedback is the success mantra of the continuous DevOps approach. It is the key to a competitive advantage and helps businesses maintain continuity.

Conclusion

Within DevOps, there is no perfect solution, and we need to evolve our processes, mindset, and toolchain over time to keep things rolling. DevOps toolchains are certainly a boon to the IT sector; at the same time, they evolve regularly and grow more and more powerful. What we need now is more control over these tools to make this journey successful.