Let’s begin by considering a few scenarios: How about deployment to physical servers? Or having to make additional space in racks while scaling? Or deployments only twice a year? While all this might sound “outdated” now, it is worth talking about these scenarios right at the beginning to highlight how far we have come as an industry. To fully appreciate where we have reached and what we have achieved, it is important to know where we come from.


The state of the industry in 2022 is the biggest testimony to the vision of shift left and reaching the market at digital speed. We ought to be glad that knowledge of cloud services, good deployment practices, and DevOps is common these days. All organizations of note use them, and Nagarro more so, as we have projects of almost all scales and capacities. DevOps can improve the value delivered by IT and allow organizations to react quickly and effectively to market pressures. As Gene Kim writes in the popular DevOps novel The Phoenix Project, “Improving daily work is even more important than doing daily work,” and DevOps practices help with exactly that.

Before moving to specific details, we must first understand the following before adopting DevOps practices in any project:

- Values and Philosophies that frame the DevOps process

- Procedures and practices of DevOps

- The Prescriptive Steps

Here, the focus is on DevOps pipelines, which are part of the prescriptive steps one needs to take to complete the integration of DevOps practices in any project.

Taken literally, a pipeline is commonly defined as “a line of pipe with pumps, valves, and control devices for conveying liquids, gases, or finely divided solids”. The points that demand focus here are the pumps, valves, and control devices. Let’s establish an analogy: we can imagine a digital pipeline that delivers our raw material, which is code, to the production environments automatically and continuously. The code flows freely through all the necessary stages, tests, and quality checks, without much manual intervention.

When it comes to building DevOps pipelines, no two projects have the same requirements. This is why it would not be correct to treat a fixed pipeline template as the right, or even the only, solution for every project.

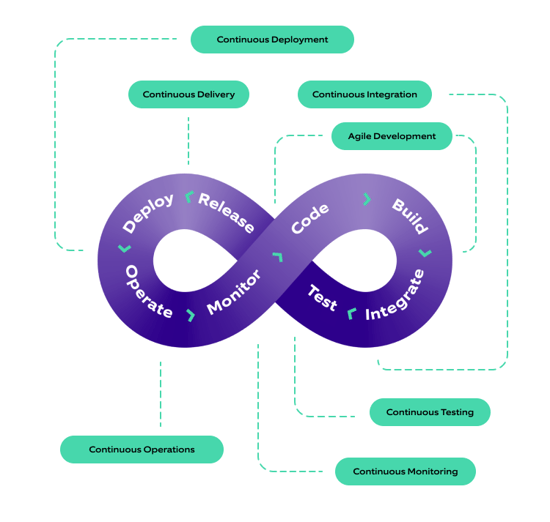

Through this article, you can understand how many DevOps pipelines a project can have, the significance of each of them, and why they should be customized. This is more of a guide for those who have basic DevOps knowledge and are struggling to establish the right pipeline or pipelines to kick-start the DevOps processes in their project. To achieve 100% automation of the DevOps processes, you must automate the following DevOps stages:

- Infrastructure creation

- Continuous Integration

- Continuous Testing

- Continuous Deployment

- Continuous Delivery

- Continuous Operations

- Continuous Monitoring

To achieve complete automation, teams can have multiple pipelines. The number also depends on how many environments a team is using. Most organizations have Dev, QA, Staging, UAT, and Production environments, and the deployments generally take place on cloud servers. For ephemeral environments like Dev and UAT, we don’t want the environment to remain up all the time and consume resources. They need to exist only when we want to perform testing activities. After the testing, we can destroy the environment and create it again when required. For such environments, we can have pipelines that include infrastructure creation as a prerequisite to the deployment step every time a deployment happens.

For environments like Testing, Production, or Staging, which need to be available all the time, we can have separate pipelines: one each for infra creation, Continuous Integration and Testing, and Continuous Delivery and Deployment.
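The difference between ephemeral and always-on environments can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the stage names and the `Environment` type are hypothetical and do not come from any particular CI/CD tool.

```python
from dataclasses import dataclass

# Hypothetical stage names, used only for illustration.
INFRA_CREATE = "create-infrastructure"
DEPLOY = "deploy"
TEST = "run-tests"
INFRA_DESTROY = "destroy-infrastructure"


@dataclass(frozen=True)
class Environment:
    name: str
    ephemeral: bool  # True if the environment is torn down after each use


def deployment_stages(env: Environment) -> list[str]:
    """Return the ordered stages of the deployment pipeline for one environment."""
    if env.ephemeral:
        # Infra is created on every run and destroyed once testing is done.
        return [INFRA_CREATE, DEPLOY, TEST, INFRA_DESTROY]
    # Always-on environments rely on a separate infra creation pipeline.
    return [DEPLOY]


for env in (Environment("dev", ephemeral=True), Environment("production", ephemeral=False)):
    print(env.name, "->", deployment_stages(env))
```

In a real project, the same decision would usually live in the pipeline definition of the chosen CI/CD tool rather than in application code.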

Let’s investigate some of these DevOps pipelines:

Infrastructure creation pipeline

This DevOps pipeline is about creating the required infrastructure. For environments like production and testing, the infrastructure is not created or updated very frequently. We primarily create the setup only once, at the beginning of the project. Subsequently, we only update it to meet scaling, compliance, or regulatory requirements. In such cases, we can have a separate DevOps pipeline for infra creation that we run to create the resources needed for the application.

Some important things to ensure while maintaining these DevOps pipelines:

- Always try to record and store the current state of the infra and use the same state when trying to update the resources next time using the pipelines. Infrastructure-as-code (IaC) creation tools like Terraform achieve this by maintaining a state file, which keeps track of the resources created by using this tool for any pipeline execution.

- Since the IaC files will be a part of a repository, access to this repository should be limited as any mess-ups in the infra file may go on to create unnecessary resources or modifications in the existing resources. This might lead to application outages or downtimes.

- If we want our IaC repository to be integrated into CI/CD, we must ensure that only the right people have access to these DevOps pipelines, with proper approvals at all the required stages. Even a single code commit can start the execution, which may create or modify resources in the production environment.

- Additionally, we must be very cautious and careful while testing the changes locally. Local code might still be referring to files with data for a production environment. Executing infra creation locally might consequently result in infra creation in any of the referred environments as well.

- If CI/CD is implemented for the infra DevOps pipeline, make sure you have proper unit tests and integration tests to verify that the right resources are created. Assert that the infra does what it is expected to do and then destroy it again, before making the actual deployment.

- Any resource-to-resource interaction like the app server being able to interact with the database or accessing credentials/keys/secrets from a vault should be working when the infra is provisioned using the pipeline.
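To make the idea of recorded state and approvals concrete, here is a minimal Python sketch. It is a simplified illustration and not how Terraform or any other IaC tool is implemented: the `plan_changes` and `requires_approval` functions and the resource names are all hypothetical. It compares the recorded state with the desired definition to decide what must change, and asks for an approval whenever production would be touched.

```python
def plan_changes(recorded: dict, desired: dict) -> dict:
    """Compare the recorded state with the desired definition."""
    recorded_names, desired_names = set(recorded), set(desired)
    return {
        "create": sorted(desired_names - recorded_names),
        "update": sorted(
            name for name in desired_names & recorded_names
            if desired[name] != recorded[name]
        ),
        "delete": sorted(recorded_names - desired_names),
    }


def requires_approval(plan: dict, environment: str) -> bool:
    """Changes to production need a sign-off; an empty plan needs none."""
    return environment == "production" and any(plan.values())


# Made-up resources for illustration.
recorded_state = {
    "app-server": {"size": "small"},
    "database": {"tier": "basic"},
}
desired_state = {
    "app-server": {"size": "medium"},   # changed
    "database": {"tier": "basic"},      # unchanged
    "key-vault": {"sku": "standard"},   # new
}

plan = plan_changes(recorded_state, desired_state)
print(plan)
print("approval needed:", requires_approval(plan, "production"))
```

Because the plan is derived from the recorded state, running the pipeline twice with the same definition yields an empty plan instead of creating the resources again.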

CI/CD Pipeline

Projects can have a single pipeline for CI/CD, or separate CI and CD pipelines, depending completely on the project requirements.

The Single Responsibility Principle of software development can be applied to DevOps pipelines as well. The more tasks we add to a single pipeline, the more dependencies it will have and the more complex it will become. As a result, when the project matures, the pipeline becomes difficult to modify quickly.

When splitting a CI/CD pipeline, you must keep these things in mind:

- The CD pipeline should know when it should run.

- Where and how will the CD pipeline get access to the files of the CI pipeline?

- How frequently do you need to deploy to production?

- Are approvals needed for deployment to production? What is the hierarchy of the required approvals?
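The questions above can be sketched as a small decision function for a separate CD pipeline. This is an illustration under assumptions, not a feature of any specific tool: `CiResult`, `next_step`, the release branch, the role names, and the artifact location are all hypothetical.

```python
from dataclasses import dataclass

RELEASE_BRANCH = "main"  # assumption: only builds from this branch are released


@dataclass(frozen=True)
class CiResult:
    branch: str
    succeeded: bool
    artifact_uri: str  # where the CI pipeline published the build


def next_step(ci: CiResult, approval_hierarchy: list[str], approved_by: set[str]) -> str:
    """Decide what the CD pipeline should do for a finished CI run."""
    if not ci.succeeded or ci.branch != RELEASE_BRANCH:
        return "skip"
    # Approvals are collected in order, from the first role to the last.
    for role in approval_hierarchy:
        if role not in approved_by:
            return f"await approval from {role}"
    return f"deploy {ci.artifact_uri}"


build = CiResult("main", True, "registry.example.com/shop-api:1.4.2")
hierarchy = ["tech-lead", "release-manager"]
print(next_step(build, hierarchy, set()))
print(next_step(build, hierarchy, {"tech-lead"}))
print(next_step(build, hierarchy, {"tech-lead", "release-manager"}))
```

The CI result carries the artifact location, so the CD pipeline never has to rebuild the code; it only needs read access to wherever the CI pipeline published the build.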

CI Pipelines

Let’s focus on the Continuous Integration pipeline. The CI pipeline primarily focuses on a) integrating the code committed by multiple developers into a shared codebase repository and b) making it ready for deployment as a build or package. Now, you might argue that this can be done directly through any version control tool. However, the beauty of having a pipeline is that the code merges into the main shared repository only after it passes the required tests and is found fit. This provides quick feedback about the code’s quality and its impact on the existing codebase, hence saving time and money.

The primary phases of the CI pipeline are to compile and build the code with the new changes, which helps surface issues or conflicts, and to test this build against the defined unit and automated test cases. Some projects might need this pipeline to push the latest build to a container repository as well, so that it can be used for direct deployments later. The Continuous Testing phase can also be folded into the CI pipeline by running unit tests, automated integration tests, and automated UI tests before the code is merged or before the build is considered ready for deployment.
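The CI phases described above can be pictured as an ordered list of stages that stops at the first failure, which is what gives developers quick feedback. The following Python sketch assumes hypothetical stage names and placeholder steps; a real pipeline would invoke the compiler, the test runner, and the container tooling instead.

```python
from typing import Callable

# A stage is a name plus a function that returns True on success.
Stage = tuple[str, Callable[[], bool]]


def run_ci(stages: list[Stage]) -> tuple[bool, list[str]]:
    """Run stages in order and stop at the first failure."""
    log: list[str] = []
    for name, step in stages:
        passed = step()
        log.append(f"{name}: {'passed' if passed else 'failed'}")
        if not passed:
            return False, log  # later stages never run
    return True, log


# Placeholder steps standing in for real build and test commands.
stages: list[Stage] = [
    ("build", lambda: True),
    ("unit tests", lambda: True),
    ("integration tests", lambda: False),
    ("publish image", lambda: True),
]

ready, log = run_ci(stages)
print("ready for deployment:", ready)
print(log)
```

Here the failing integration tests prevent the image from being published, so a broken build never reaches the repository that deployments pull from.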

CD Pipelines

CD stands for Continuous Deployment and can also be referred to as Continuous Delivery, as per the context in which it is being used in the project. The prime focus of this phase will be to take the build that passes all the automated tests and release it into the desired environment.

This pipeline is called a Continuous Deployment pipeline only if it deploys directly to production. This kind is also called a Continuous Release DevOps pipeline. Often, however, not every build is fit to be deployed to production. On such occasions, we might want to continuously push builds to a build or container repository instead. We can set up flows in the pipeline that make the build ready for production, while the actual release to the production environment needs a manual trigger or approval. This modified pipeline can be called a Continuous Delivery DevOps pipeline. Therefore, if we implement Continuous Deployment, Continuous Delivery is also achieved, but the reverse is not true.

While doing Continuous Deployment, the pipeline can either directly pick the latest build created by the continuous integration pipeline and deploy it to the desired servers or it can pull from the repositories to which we push the final build.
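The distinction between Continuous Delivery and Continuous Deployment described above comes down to who triggers the final step. A minimal Python sketch, assuming a hypothetical `ReleaseMode` enum and made-up action names:

```python
from enum import Enum


class ReleaseMode(Enum):
    CONTINUOUS_DELIVERY = "delivery"
    CONTINUOUS_DEPLOYMENT = "deployment"


def release_actions(mode: ReleaseMode, tests_passed: bool, manually_approved: bool = False) -> list[str]:
    """List what the CD pipeline does with a build in the given mode."""
    if not tests_passed:
        return []
    # Both modes always produce a build that is ready for production.
    actions = ["publish build to repository"]
    # Deployment mode releases automatically; delivery mode waits for a person.
    if mode is ReleaseMode.CONTINUOUS_DEPLOYMENT or manually_approved:
        actions.append("deploy to production")
    return actions


print(release_actions(ReleaseMode.CONTINUOUS_DELIVERY, tests_passed=True))
print(release_actions(ReleaseMode.CONTINUOUS_DELIVERY, tests_passed=True, manually_approved=True))
print(release_actions(ReleaseMode.CONTINUOUS_DEPLOYMENT, tests_passed=True))
```

Every action list produced in deployment mode contains the delivery step as well, which mirrors the point that Continuous Deployment implies Continuous Delivery but not the other way round.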

Continuous Monitoring

This comes at the end of the pipeline, once the code is deployed in production. It is evidently not part of any of the above DevOps pipelines. Continuous Monitoring is a separate setup, provided by most cloud providers (Azure Monitor, Amazon CloudWatch, etc.) or built with other technologies like ELK. It provides feedback on how the software is performing, including specific issues and performance anomalies, and it keeps supplying real-time metrics that can be used for analytics. Continuous Monitoring also raises alerts about security breaches or possible threats. It can cover the entire infrastructure, the application, and even the network.

Best Practices to follow while creating project DevOps Pipelines:

- Keep a rollback strategy ready. If containerized deployments are done, then the rollback can happen quickly and easily, by using the last released snapshot from the container. But if major changes have already been made post-deployment and the database changes made are incompatible, we need to get our hands dirty by restoring the DB and other affected resources.

- Use key vaults or secret file libraries to store passwords and secrets that need to be passed to the pipeline to create resources.

- Use pipeline variables to pass values to different pipeline tasks to make the pipeline more flexible and useful for deployments to multiple environments.

- Use the pipeline caching feature to reduce the execution time of the pipelines by not downloading the same dependencies on every run.

- Use pipeline options to automate the creation of issues on build failures.

- Keep an eye on the DevOps metrics like Lead Time, Deployment Frequency, and Mean-Time-To-Recovery.
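The metrics in the last point are simple to compute once commit, deployment, and incident timestamps are recorded. The Python sketch below uses made-up sample data, and the helper names `mean_duration` and `deployments_per_week` are hypothetical; in practice these numbers would come from the CI/CD tool and the incident tracker.

```python
from datetime import datetime, timedelta
from statistics import mean

# Made-up sample data: (commit time, time the change reached production).
deployments = [
    (datetime(2022, 3, 1, 9, 0), datetime(2022, 3, 1, 15, 0)),   # 6 hours
    (datetime(2022, 3, 3, 10, 0), datetime(2022, 3, 4, 10, 0)),  # 24 hours
    (datetime(2022, 3, 7, 8, 0), datetime(2022, 3, 7, 20, 0)),   # 12 hours
]
# Made-up sample data: (incident start, service restored).
incidents = [
    (datetime(2022, 3, 4, 11, 0), datetime(2022, 3, 4, 12, 30)),  # 90 minutes
    (datetime(2022, 3, 7, 21, 0), datetime(2022, 3, 7, 21, 30)),  # 30 minutes
]


def mean_duration(pairs: list[tuple[datetime, datetime]]) -> timedelta:
    """Average time between the start and end of each pair."""
    return timedelta(seconds=mean((end - start).total_seconds() for start, end in pairs))


def deployments_per_week(deploys: list, period_days: int) -> float:
    """Deployment frequency, normalized to a seven-day week."""
    return len(deploys) / (period_days / 7)


print("Lead time:", mean_duration(deployments))
print("Mean time to recovery:", mean_duration(incidents))
print("Deployments per week:", deployments_per_week(deployments, period_days=7))
```

Tracking these values over time, rather than looking at a single snapshot, shows whether changes to the pipelines are actually paying off.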

As more and more projects move towards DevOps practices, a lot of people are enhancing their skills and working towards a fully automated CI/CD pipeline. Many projects have already achieved this automation and are good role models for us to follow and seek help from. DevOps is not a mere process or method; it is a cultural change that everyone needs to accept to achieve high scalability and high availability.

Having faced these same struggles while starting with DevOps, I wrote this article to share my experiences and learnings on how to start with DevOps pipeline creation, how many pipelines are required, which CD to go for (continuous deployment or continuous delivery), and which DevOps best practices to follow.

Perhaps it might be best to conclude this article with another quote from The Phoenix Project by Gene Kim: “Remember that there are a lot of experienced people around you who’ve been on similar journeys, so don’t be the idiot that fails because he didn’t ask for help.”

If you are looking to optimize your DevOps potential and capabilities, you can always count on Nagarro’s DevOps experts.