There is no doubt that a Microservice architecture can help with efficient scaling and utilization of resources, and with aligning your services to business domains. However, deploying and tracking Microservices on a cluster of hosts and monitoring resource utilization becomes increasingly difficult if done manually. This is where an orchestrator is needed. There are popular options like Mesosphere’s DC/OS, Docker in Swarm mode, and frameworks like Spring Cloud, Akka.io, Service Fabric, etc. that inherently support the implementation of Microservices. Here, however, let’s explore approaches built around AWS EC2 Container Service (ECS), Kubernetes and Lambda functions for deploying and orchestrating Microservices on Amazon Web Services (AWS).


Key considerations for solution design include cluster management, monitoring and scheduling services, service discovery and auto scaling.

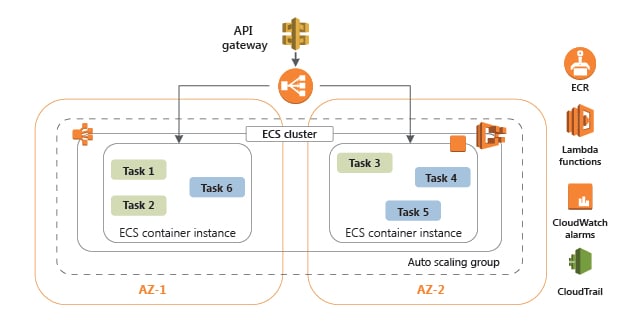

1. Dockerized Microservices on AWS EC2 Container Service (ECS)

ECS facilitates running containers across multiple availability zones within a region. Task definitions let you specify the Docker container images to run across the cluster. The solution natively supports storing and pulling Docker images through AWS ECR (a managed, SaaS-based registry). The diagram below depicts how ECS can be used to orchestrate Microservices hosted in Docker containers.
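To make the role of a task definition concrete, here is a minimal sketch in Python that only builds the JSON document and does not call AWS. The field names follow the ECS task definition format; the service name `orders-service`, the account ID, the region and the resource sizes are hypothetical values chosen for illustration.

```python
import json


def build_task_definition(family, image, container_port, memory_mib=256, cpu_units=128):
    """Return a dict shaped like an ECS task definition with a single container."""
    return {
        "family": family,
        "containerDefinitions": [
            {
                "name": family,
                "image": image,          # image URI, for example one stored in ECR
                "memory": memory_mib,    # hard memory limit in MiB (illustrative value)
                "cpu": cpu_units,        # CPU units, where 1024 units equal one vCPU
                "essential": True,
                "portMappings": [
                    # hostPort 0 asks ECS to pick a dynamic host port (bridge networking)
                    {"containerPort": container_port, "hostPort": 0, "protocol": "tcp"}
                ],
            }
        ],
    }


# Hypothetical service and ECR repository, used only for illustration.
task_def = build_task_definition(
    family="orders-service",
    image="123456789012.dkr.ecr.us-east-1.amazonaws.com/orders-service:1.0.0",
    container_port=8080,
)
print(json.dumps(task_def, indent=2))
```

A document of this shape is what you would typically register with ECS (for instance through the console, the CLI or an SDK) before creating a service that runs it.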

Key benefits:

- Offloads complex cluster management and orchestration of containerized Microservices.

- Automates monitoring of cluster and services (set of ECS tasks) via CloudWatch.

- Auto scales AWS EC2 instances in ECS cluster.

- Auto scales tasks (set of running containers as a unit) in ECS service.

Key concerns:

- Vendor lock-in.

- A custom implementation is required for automatic service discovery, using CloudTrail, CloudWatch and a Lambda function.

- Currently, task-level auto scaling is available in only a few regions. For other regions, a custom solution needs to be developed using AWS Lambda, SNS and CloudWatch.
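The custom task-level scaling mentioned in the last point could look roughly like the sketch below: a CloudWatch alarm publishes to an SNS topic, and a Lambda function reads the alarm and decides the new desired task count. This is only an illustration under assumptions. The alarm naming convention (`-high` / `-low` suffixes), the bounds and the one-step scaling policy are hypothetical, and the actual call that updates the ECS service is left as a comment.

```python
import json


def next_desired_count(current, alarm_name, alarm_state, minimum=1, maximum=10):
    """Decide the next task count from a CloudWatch alarm (hypothetical policy)."""
    if alarm_state != "ALARM":
        return current                      # OK / INSUFFICIENT_DATA: leave as is
    if alarm_name.endswith("-high"):
        return min(current + 1, maximum)    # scale out by one task
    if alarm_name.endswith("-low"):
        return max(current - 1, minimum)    # scale in by one task
    return current


def handle_sns_event(event, current_count):
    """Extract the CloudWatch alarm from an SNS-triggered Lambda event."""
    message = json.loads(event["Records"][0]["Sns"]["Message"])
    desired = next_desired_count(
        current_count, message["AlarmName"], message["NewStateValue"]
    )
    # In a real function you would now update the ECS service's desired count
    # through the AWS SDK; that call is omitted to keep the sketch self-contained.
    return desired


# Sample event trimmed to the fields this sketch reads.
sample_event = {
    "Records": [
        {"Sns": {"Message": json.dumps(
            {"AlarmName": "orders-cpu-high", "NewStateValue": "ALARM"}
        )}}
    ]
}
print(handle_sns_event(sample_event, current_count=2))
```

Keeping the decision logic in a pure function makes it easy to test locally before wiring it to SNS and ECS.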

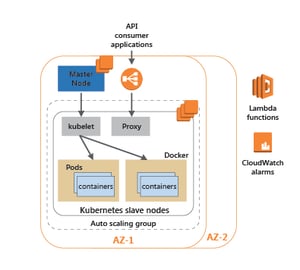

2. Dockerized Microservices on the EC2 cluster with Kubernetes as the orchestrator tool

Kubernetes is an open-source container orchestration tool. The diagram below shows how Kubernetes can be deployed on AWS to manage containers in EC2 clusters. The Kubernetes master node is responsible for monitoring the worker nodes and scheduling “Pods” onto them. Pod specifications let you declare the Docker container images to run across the cluster.
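As an illustration of how a Pod names the container image to run, the following Python sketch builds a minimal Pod manifest as a plain dict and prints it as JSON, a format `kubectl` accepts alongside YAML. The pod name, image and port are hypothetical.

```python
import json


def build_pod_manifest(name, image, container_port):
    """Return a minimal Kubernetes Pod manifest with a single container."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,  # Docker image the node will pull and run
                    "ports": [{"containerPort": container_port}],
                }
            ]
        },
    }


# Hypothetical service name and image, used only for illustration.
pod = build_pod_manifest("orders", "example/orders-service:1.0.0", 8080)
print(json.dumps(pod, indent=2))
```

In practice Pods are usually created indirectly through a higher-level object such as a Deployment, which wraps the same container specification.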

Key benefits:


- No vendor lock-in. The same solution can be deployed in the cloud as well as on premises.

- Offloads complex orchestration of containerized Microservices.

- Automates service (set of Kubernetes pods) discovery through “kube-dns” package.

- Monitors and auto scales pods (set of containers in Kubernetes).

Key concerns:

- A custom implementation using CloudWatch metrics and a Lambda function is required to auto scale the EC2 cluster, i.e. to register and de-register EC2 instances in the Kubernetes cluster.
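Two of the points above, DNS-based service discovery and pod auto scaling, can be made concrete with a small Python sketch. The first helper builds the cluster-internal DNS name that a Service receives, assuming the default cluster domain `cluster.local`. The second applies the replica formula documented for the Horizontal Pod Autoscaler; the real autoscaler additionally applies a tolerance and minimum/maximum bounds, which are omitted here. The service name and metric values are hypothetical.

```python
import math


def service_dns_name(service, namespace="default", cluster_domain="cluster.local"):
    """DNS name under which a Kubernetes Service is resolvable inside the cluster."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def desired_replicas(current_replicas, current_metric, target_metric):
    """Core autoscaling formula: ceil(current_replicas * current / target)."""
    return math.ceil(current_replicas * current_metric / target_metric)


print(service_dns_name("orders"))
print(desired_replicas(current_replicas=4, current_metric=90, target_metric=60))
```

With these illustrative inputs, four pods running at 90% average CPU against a 60% target would be scaled out to six.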

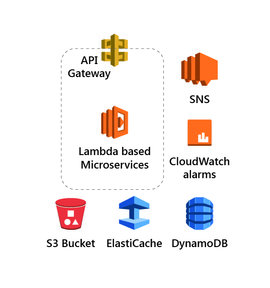

3. Microservices implemented as AWS Lambda integrated with AWS API Gateway

Container-based Microservices help in better resource utilization; however, at times too many servers are required to coordinate all the Microservices efficiently, especially in cases where the functionality is lightweight. In such a scenario, AWS Lambda comes to the rescue. AWS Lambda functions allow you to create serverless backends. The diagram here illustrates Lambda functions as the compute option, with Amazon API Gateway providing an HTTP endpoint and data stored in an S3 bucket, ElastiCache and DynamoDB.
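A lightweight Microservice of this kind can be as small as one function. The sketch below assumes API Gateway's Lambda proxy integration, where the request arrives as an event dict and the function returns a dict with `statusCode`, `headers` and a string `body`. The `orderId` path parameter and the response payload are hypothetical, and the data store lookup is replaced by a stub.

```python
import json


def _response(status_code, payload):
    """Build a response in the shape expected by the Lambda proxy integration."""
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def handler(event, context):
    """Entry point invoked by Lambda for each API Gateway request."""
    params = event.get("pathParameters") or {}
    order_id = params.get("orderId")
    if order_id is None:
        return _response(400, {"error": "orderId is required"})
    # A real service would read from DynamoDB, ElastiCache or S3 here.
    return _response(200, {"orderId": order_id, "status": "PENDING"})


# Local invocation with a trimmed-down event; no AWS account is needed.
print(handler({"pathParameters": {"orderId": "42"}}, None))
```

Because the handler is a plain function, it can be exercised locally with hand-built events before being deployed behind API Gateway.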

Key benefits:


- Easy to build and deploy; no servers, no deployments onto servers, no installed software of any kind.

- Pay only for resources used in the execution, not for any continuously running servers. This results in cost savings, especially where the load is highly variable.

- Automates service discovery through integration with API Gateway.

- Scales automatically based on incoming requests.

- High availability for both the service itself and for the Lambda functions it operates.

- Automatically monitors Lambda functions.

Key concerns:

- Vendor lock-in.

- Limited troubleshooting and debugging support.

- AWS Lambda has a few limitations:

  - All calls must complete execution within 300 seconds.

  - Default throttle of 100 concurrent executions per account per region. For other limitations and details, refer to: http://docs.aws.amazon.com/lambda/latest/dg/limits.html
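One common way to live with the execution time limit is to stop early and hand back unfinished work instead of being cut off mid-item. The sketch below relies on the `get_remaining_time_in_millis()` method that the Lambda context object provides in the Python runtime. The `process_batch` helper, the 5-second safety margin and the `FakeContext` class used for local testing are assumptions made for illustration.

```python
def process_batch(items, context, work, safety_margin_ms=5000):
    """Process items until the remaining execution time drops below the margin.

    Returns a tuple (results, leftover_items) so the caller can re-queue
    whatever was not processed in this invocation.
    """
    results = []
    for index, item in enumerate(items):
        if context.get_remaining_time_in_millis() < safety_margin_ms:
            return results, items[index:]
        results.append(work(item))
    return results, []


class FakeContext:
    """Stand-in for the Lambda context object, for local testing only."""

    def __init__(self, readings_ms):
        self._readings = iter(readings_ms)

    def get_remaining_time_in_millis(self):
        return next(self._readings)


# Simulate an invocation that runs short on time before the third item.
ctx = FakeContext([20000, 12000, 4000])
done, leftover = process_batch([1, 2, 3], ctx, work=lambda x: x * 2)
print(done, leftover)
```

The leftover items could then be sent to a queue or passed to a follow-up invocation, depending on how the service is designed.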

Based on current market trends and our experience, Kubernetes is the most popular orchestration tool for running Dockerized Microservices in production, followed by Swarm and AWS ECS. However, it is important to weigh the business need and a range of other factors to reach a suitable solution. For example, one of our clients, counted among the world’s largest management consultancy firms, had workloads on AWS and required a solution that would inherently manage highly variable load across their services. We proposed containerized Microservices orchestrated via AWS ECS to achieve fine-tuned scaling and utilization of the underlying hardware. AWS Lambda was also used in this solution to implement lightweight Microservices. For another client, for whom vendor independence and ease of future migration were the top priorities, we proposed a Kubernetes-based solution. As a solution provider, we consider factors like existing solutions, the flexibility required in infrastructure monitoring and management, ease of platform migration and more.

Adopting a Microservice architecture brings many advantages but can add complexity to the system landscape. Selecting the right orchestration tool for your specific business needs is therefore of utmost importance, and the approaches discussed here can help you make that choice.

Containers, Lambda, Microservices, Cloud, Aws, Dockers, Cloud services

Vivek Agrawal
