Houssemeddine Ghanmi

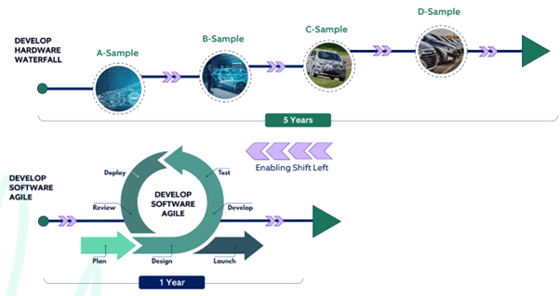

Developing high-quality vehicles is an incremental process that requires testing early and often. As vehicles become more intelligent and their components more interdependent, testing has become even more important. The sooner you test, the better your chances of fixing bugs in time to avoid postponing or rolling back features. But testing comes with its own challenges. This blog helps you lay out your strategy for shift-left testing, which has emerged as a savior for the automotive industry.

Fig 1: Vehicle development lifecycle

Vehicle Development Process

Today, the core functionality of cars is primarily based on software that runs on embedded computers known as electronic control units (ECUs). Because software plays such a key role, developing these ECUs is vital to car development.

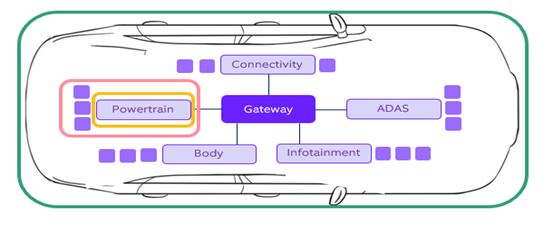

Fig 2: ECUs as E/E architecture

The development of ECUs is divided into sub-tasks called features, which are further divided into smaller, sequential backlogs. Backlogs are collections of smaller features derived from larger ones. For example, object detection may be a smaller component of a larger feature like active cruise control.

The V-Model defines releases sequentially, as shown in the figure below. Each release has three main tasks. The process begins with understanding the requirements and architecture of the planned features. Next, the features are developed and implemented in the relevant components. Finally, the functionality is verified at the component and domain levels and validated at the vehicle level.


Fig 3: V-Model

While the implementation happens on the component level, the verification and validation happen at three levels – the component, its domain, and the complete system.

Testing at all three levels is mandatory because vehicle functions are distributed over more than one component and often across multiple domains. For instance, a driving assistance function a) uses sensors such as the camera or radar, b) processes that information on a central platform to make decisions, and c) carries out those decisions through actuators and the display. A key characteristic of the V-Model is the sequential execution of tasks by the departments involved, as shown in the preceding figure.

Verification and validation

As the figure below illustrates, the defects discovered in the verification phase of the first release (R1) are reported to the development team in the hope that they will be fixed in the second release (R2). While other teams verify R1, the development team has already started working on R2. Because each release has a fixed time budget, scheduling fixes for defects that are reported late in R2's development pushes some of R2's planned features into the third release (R3). Those postponed features can then no longer be verified and validated in the current release.

Fig 4: Releases and development tasks
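The scheduling effect described above can be illustrated with a small planning sketch. It assumes that each release has a fixed effort budget, that fixes for reported defects are always scheduled first, and that planned features are kept in priority order, so once one feature no longer fits, it and every lower-priority feature slip to the next release. The function name `plan_release`, the feature names, and the effort values are all hypothetical.

```python
# Hypothetical sketch: bug fixes are scheduled before planned features,
# and features that no longer fit the release budget spill over.

def plan_release(capacity, bug_fixes, features):
    """Plan one release.

    capacity:  effort budget of the release (hypothetical units, e.g. person-days)
    bug_fixes: list of (name, effort) for defects reported against earlier releases
    features:  list of (name, effort) planned features, in priority order

    Returns (scheduled, postponed). Bug fixes are always scheduled first;
    features fill the remaining capacity in order, and everything from the
    first feature that no longer fits onwards is postponed.
    """
    scheduled = [name for name, _ in bug_fixes]
    remaining = capacity - sum(effort for _, effort in bug_fixes)
    postponed = []
    for i, (name, effort) in enumerate(features):
        if effort > remaining:
            postponed = features[i:]  # this and all lower-priority features slip
            break
        scheduled.append(name)
        remaining -= effort
    return scheduled, postponed


# Planned R2 features (hypothetical names and effort values)
r2_features = [("lane_keeping", 5), ("object_detection", 4), ("traffic_sign_recognition", 3)]

# Without late defects, all R2 features fit into the budget.
print(plan_release(12, [], r2_features))
# (['lane_keeping', 'object_detection', 'traffic_sign_recognition'], [])

# A late R1 defect consumes part of the budget, and two features slip to R3.
print(plan_release(12, [("fix_r1_radar_timeout", 4)], r2_features))
# (['fix_r1_radar_timeout', 'lane_keeping'], [('object_detection', 4), ('traffic_sign_recognition', 3)])
```

The features are deliberately not reordered to fill leftover capacity, because later features may depend on earlier ones. This is also why a single late defect can postpone several features at once.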

The earlier a defect is reported, the easier it is for the development team to manage and schedule its fix in the next release. There have been occasions when delayed defect reporting has caused a rollback of already-developed features. Reverting a feature in the vehicle software development cycle means rolling it back to its previous state, effectively removing it from the current version of the software. This happens when the feature has issues or does not meet the desired requirements or standards. Surely, no one wants a rollback, especially after putting in all the hard work of developing, testing, and releasing a feature.

A rollback has another downside. Organizations usually have limited resources for fixing bugs, as testing the current releases takes up most of the resources in the later stages of the development cycle. Developing, reverting, and reprioritizing features eats up time allocated for other tasks.

As vehicle functions become more interconnected and dependent on each other, shifting one feature also changes the domain and system integration of every feature that depends on it. This increases the time spent identifying related features, rescheduling integration activities, and filtering out defects caused by dependencies. The more the components and domains depend on each other, the higher the probability (and risk) of companies losing track of all the shifted features. This is especially apparent in domain and system integration, where it delays bug reporting. Pushing features and their dependents back over several releases leads to an accumulation of bugs, a loss of control, and a longer vehicle development time.

“Test early and often”

The earlier a bug is found, the easier it is to fix. However, the testing process is, well, truly testing! It comes with its fair share of challenges. Here are some of the main drivers of its complexity:

- Complex customer functions spanning different components and domains.

- Adoption of service-oriented architecture: Service discovery enables real-time communication between the ECUs in a vehicle, making it easier for OEMs to configure the vehicle and connect it to backend services. However, because services find each other at runtime, static testing can cover only the component and domain levels, so some bugs are discovered only later, during the verification phases.

Organizations can adopt different methods to increase the efficiency of the testing process. For example, they can perform smaller, incremental testing by breaking down a large, expected outcome into smaller parts, reducing the testing time required.

Nagarro has developed a cloud-based solution that lets an automotive player run tests across its in-the-loop test systems. As shown in Figure 7, this solution connects existing hardware in domains such as ADAS, infotainment, driving, and connectivity, and runs end-to-end tests on features like driving assistance functions. The main objective is to minimize the reliance on test vehicles and avoid adding new test racks.


Fig 5: Small V model compared to a Big V model

Some of the other testing methods are:

Simulation-based approaches: These approaches use virtual models to replicate real-life scenarios. They include data-based digital twins and virtualization of the target at different levels, such as the hardware, operating system, middleware, or even the application. The goal is to make testing more flexible and independent of physical hardware and test vehicles.

Explorative test coverage: Improve test coverage with self-learning and exploratory methods. Based on data from previous tests, focus testing efforts on areas known to be high-risk or high-impact.
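One simple way to focus testing on the basis of previous results is to rank test areas by a risk score. The sketch below is a plain risk-weighted heuristic rather than a self-learning model. The score multiplies a smoothed historical failure rate by a hypothetical impact weight. The class `FeatureArea`, the functions `risk_score` and `prioritize`, and all numbers are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class FeatureArea:
    name: str
    runs: int      # test runs in this area during previous cycles
    failures: int  # runs that uncovered a defect
    impact: int    # hypothetical severity weight, e.g. 1 (comfort) to 5 (safety)


def risk_score(area: FeatureArea) -> float:
    # Laplace-smoothed failure rate, so rarely tested areas are not treated as risk-free
    failure_rate = (area.failures + 1) / (area.runs + 2)
    return failure_rate * area.impact


def prioritize(areas: list[FeatureArea], budget: int) -> list[str]:
    """Return the names of the `budget` highest-risk areas, riskiest first."""
    ranked = sorted(areas, key=risk_score, reverse=True)
    return [area.name for area in ranked[:budget]]


# Hypothetical history from previous test cycles
areas = [
    FeatureArea("infotainment_ui", runs=98, failures=8, impact=1),
    FeatureArea("emergency_braking", runs=48, failures=2, impact=5),
    FeatureArea("lane_keeping", runs=18, failures=3, impact=4),
    FeatureArea("seat_heating", runs=8, failures=0, impact=1),
]
print(prioritize(areas, budget=2))  # ['lane_keeping', 'emergency_braking']
```

Because of the smoothing, `seat_heating` (0.1) ranks just above `infotainment_ui` (0.09) despite having no recorded failures. It has simply been tested too rarely to be considered safe. Feeding each new cycle's results back into `runs` and `failures` is what makes the ranking adapt over time.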

Shadow mode implementation: A new feature runs alongside the existing system, but its output is not used in the production environment. Instead, the shadow implementation's results are monitored and compared with the output of the existing system. This lets developers test the new system or feature under real-world conditions without affecting production. The aim is to identify any problems with the new system or feature before it is fully integrated into production.
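The shadow-mode idea can be sketched in a few lines. Both implementations receive the same inputs, only the production output is passed on, and deviations of the candidate are logged for later analysis. The two gap policies (a 2-second following distance versus a hypothetical shorter gap plus a fixed margin), the tolerance, and the recorded speeds are all assumptions chosen for illustration.

```python
def current_gap_policy(speed_mps):
    """Existing (production) logic: target gap for a 2-second rule, in metres."""
    return 2.0 * speed_mps


def new_gap_policy(speed_mps):
    """Candidate logic under evaluation (hypothetical): shorter time gap plus a fixed margin."""
    return 1.8 * speed_mps + 5.0


def run_in_shadow_mode(production, candidate, inputs, tolerance):
    """Run both implementations on the same inputs.

    Only the production output is returned for use; the candidate output is
    compared and logged whenever it deviates by more than `tolerance`.
    """
    used_outputs = []
    deviations = []
    for x in inputs:
        used = production(x)    # this value would drive the vehicle
        shadow = candidate(x)   # this value is only observed
        used_outputs.append(used)
        if abs(used - shadow) > tolerance:
            deviations.append((x, used, shadow))
    return used_outputs, deviations


speeds = [10.0, 20.0, 30.0]  # recorded vehicle speeds in m/s (hypothetical)
used, deviations = run_in_shadow_mode(current_gap_policy, new_gap_policy, speeds, tolerance=2.5)
print(used)        # [20.0, 40.0, 60.0]
print(deviations)  # [(10.0, 20.0, 23.0)]
```

The vehicle's behavior is driven only by `used`, so the candidate can be evaluated on real inputs without any risk. The logged deviation at low speed is exactly the kind of finding that should be analyzed before the new logic goes into production.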

Self-learning tests: Conduct self-learning tests at each level by adjusting test cases and test data based on previous results.

Shift late-stage testing to earlier phases by using simulations, API testing, and existing test systems: Connect component test racks through the cloud to create a system test rack, which provides more accurate results before integration. One example of this approach is model-based testing, where the requirements are tested without waiting for the software or hardware to be ready.
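To show how requirements can be tested before software or hardware exists, here is a minimal model-based testing sketch. An executable model of a hypothetical adaptive cruise control (ACC) speed-selection rule is checked against two requirements across a grid of scenarios. The requirement IDs, the 1.8 s time gap, and all function names are assumptions for illustration and do not come from any real specification.

```python
import itertools

TIME_GAP_S = 1.8  # hypothetical requirement value: minimum time gap to the lead vehicle


def acc_model(set_speed, distance_to_lead):
    """Executable model of a hypothetical ACC speed-selection rule (m/s, m):
    follow the driver's set speed unless that would violate the time gap."""
    gap_limited_speed = distance_to_lead / TIME_GAP_S
    return max(0.0, min(set_speed, gap_limited_speed))


def check_requirements(model, set_speeds, distances):
    """Evaluate `model` on every scenario and return the requirement violations."""
    violations = []
    for set_speed, distance in itertools.product(set_speeds, distances):
        speed = model(set_speed, distance)
        if speed > set_speed:
            violations.append(("REQ-1: never exceed the set speed", set_speed, distance))
        # small tolerance absorbs floating-point rounding in speed * TIME_GAP_S
        if speed * TIME_GAP_S > distance + 1e-9:
            violations.append(("REQ-2: keep the minimum time gap", set_speed, distance))
    return violations


def faulty_acc_model(set_speed, distance_to_lead):
    """A deliberately wrong model that ignores the vehicle ahead."""
    return set_speed


set_speeds = [20.0, 30.0]        # m/s
distances = [20.0, 60.0, 100.0]  # m
print(check_requirements(acc_model, set_speeds, distances))              # []
print(len(check_requirements(faulty_acc_model, set_speeds, distances)))  # 2
```

The same scenario grid can later be replayed against the real ECU software or a test rack. The model-level run, however, already catches the faulty design (two REQ-2 violations at a 20 m distance) without any hardware.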


Fig 6: Creation of new test instances

Fig 7: Nagarro’s E2E test automation tool

Each vehicle domain contributing to a customer feature is tested locally on its hardware-in-the-loop (HIL) systems, while most end-to-end (E2E) testing of features is performed in the vehicle itself.

By catching potential problems at an early stage, OEMs can save time and resources while ensuring the safety and reliability of their vehicles. It is important to recognize the value of testing, prioritize it, and allocate the resources needed to test vehicle software thoroughly and address any issues promptly. By doing so, the automotive industry can continue to drive innovation and bring cutting-edge technology to consumers while maintaining the highest standards of safety and reliability. Ultimately, early testing benefits everyone: it builds confidence in the software and enhances the overall user experience.